The development of society is characterised by an accelerating production and dissemination of knowledge and information. The amount of information produced worldwide each day now exceeds what a human brain could absorb in a lifetime. We often receive more information than we want or can process.

How many useless messages do we receive every day? In addition to quantity, the quality of the information produced raises questions. Distorted, falsified or biased information can lead us to make poor choices and decisions. In this context, scientific studies can be useful to answer research questions objectively and reliably. However, not all scientific studies are the same, and some studies may have many biases. Do the authors of the study have a conflict of interest? What are the sources of funding and other support (such as supplies)? What is the role of the funders? How was the sample size determined?

Do the results presented carry the same weight whether there is only one subject, twenty or a hundred? Have the authors provided sufficient detail in the protocol to allow the same study to be repeated and the results to be verified? Did they use a statistical method appropriate to the data collected? To assess the scientific reliability of the research data presented, we assign each selected study a level of scientific evidence, which in turn helps determine the relevance of the solution studied.

The scientific evidence

To determine the level of scientific evidence of the studies, we objectively assess the type of study, any bias, any errors made by the authors (Is the protocol sufficient to reproduce the study and verify the results? Were the data collected and processed in a relevant way? Did the authors respect the national code of ethics and the code of conduct for integrity in research?) and any conflicts of interest (Do any of the authors have an interest in obtaining a particular result? Is one of the authors funded by a commercial company that has such an interest?). Together, these assessments define the level of methodological quality of the studies.

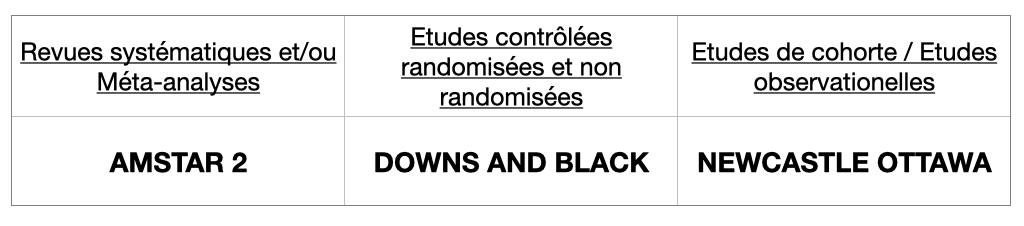

Several grids for assessing methodological bias have been proposed by scientific societies. For our analyses, we use the grids presented in the table below.

The levels of scientific evidence vary from 1 (the highest level) to 4 (the lowest) and are classified as follows:

1++: High quality meta-analyses, systematic reviews of randomised controlled trials, or randomised controlled trials with a very low risk of bias

1+: Well conducted meta-analyses, systematic reviews of randomised controlled trials, or randomised controlled trials with a low risk of bias

1-: Meta-analyses, systematic reviews, or randomised controlled trials with a high risk of bias

2++: High quality systematic reviews of case-control or cohort studies or high quality case-control or cohort studies with a very low risk of confounding, bias, or chance and a high probability that the relationship is causal

2+: Well conducted case-control or cohort studies with a low risk of confounding, bias, or chance and a moderate probability that the relationship is causal

2-: Case-control or cohort studies with a high risk of confounding, bias, or chance and a significant risk that the relationship is not causal

3: Non-analytic studies, e.g. case reports, case series

4: Expert opinion
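As an illustration only, the scale above can be written as a small lookup that compares or sorts studies by their level of evidence. This is a minimal sketch, not the tool used for our analyses: the names `LEVELS`, `rank`, `strongest` and `sort_by_evidence` are hypothetical, and the strict ordering simply follows the order of the list above. Whether a 1- study (high risk of bias) should really outrank a 2++ study is a matter of judgement that this sketch does not settle.

```python
# Level codes in the order they are listed above, strongest evidence first.
# Assumption: the listing order is treated as a strict ranking.
LEVELS = ["1++", "1+", "1-", "2++", "2+", "2-", "3", "4"]


def rank(level: str) -> int:
    """Return 0 for the strongest level ('1++') up to 7 for the weakest ('4')."""
    if level not in LEVELS:
        raise ValueError(f"unknown level of evidence: {level!r}")
    return LEVELS.index(level)


def strongest(levels):
    """Return the strongest level of evidence among the given codes."""
    return min(levels, key=rank)


def sort_by_evidence(studies):
    """Sort (study_name, level) pairs from strongest to weakest evidence."""
    return sorted(studies, key=lambda study: rank(study[1]))


# Hypothetical studies, for illustration only.
studies = [("case series", "3"), ("cohort study", "2+"), ("meta-analysis", "1+")]
ordered = sort_by_evidence(studies)
```

Here `ordered` lists the meta-analysis first and the case series last, and `strongest(["2+", "1-", "3"])` returns `"1-"` under the ordering assumed above.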

Once the level of evidence has been determined, the next step is to form an objective and independent opinion about the solution being studied: are the results strong enough to recommend it? What are the side effects or other adverse effects? What is the cost/benefit ratio?